Sound Waveform Generation Using 3D Vertex Data

Mentor 1

Kevin Schlei

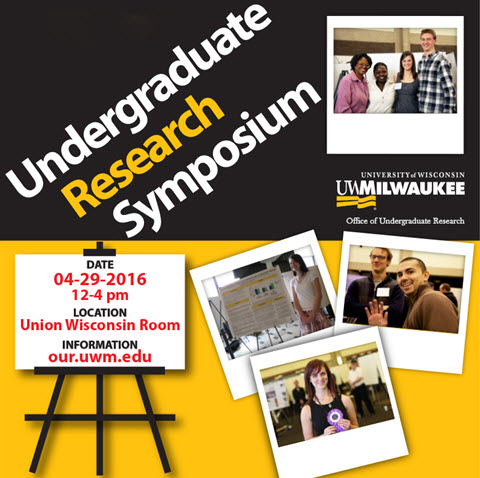

Location

Union Wisconsin Room

Start Date

29-4-2016 1:30 PM

End Date

29-4-2016 3:30 PM

Description

We implemented a sound synthesizer whose waveform generation system is based on 3D model vertices. Our system, built with the Metal API, reflects the GPU-transformed vertex data back to the CPU, which passes it to the audio engine. Creative manipulation of 3D geometry and lighting changes the audio waveform in real time; the 3D model vertices are treated as a creative stream of audio or control data. This allows for the exploration of odd geometries, impossible shapes, and glitches, each with a different sonic result. Changes to scene characteristics, like lighting, camera position, and object color, can also contribute, and switching between fragment shaders allows for instant changes in timbre in real time. We developed a test mobile application to evaluate the performance of the data streams and the sonic results. The user interface allows for 3-axis rotation of a model and translation away from the center point. A simple momentum system lets the 3D model be 'thrown' around the world space, and a slider sets the wavetable oscillation frequency.
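The idea of treating transformed vertices as a wavetable can be illustrated with a small CPU-only sketch. It is not the Metal implementation described above: the function names (`rotate_z`, `vertices_to_wavetable`, `render`), the choice of a single vertex coordinate as the sample value, the peak normalization, and the linear interpolation are all assumptions made for illustration. The rotation stands in for the GPU vertex transform, and the `frequency` argument plays the role of the slider that sets the wavetable oscillation frequency.

```python
import math


def rotate_z(vertices, angle):
    """Rotate (x, y, z) vertices about the z axis; stands in for the GPU transform."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in vertices]


def vertices_to_wavetable(vertices, axis=1):
    """Use one coordinate of each vertex as a sample, scaled to the range [-1, 1].

    Which coordinate to read is an assumption; the abstract does not specify it.
    """
    samples = [v[axis] for v in vertices]
    if not samples:
        return []
    peak = max(abs(s) for s in samples)
    if peak == 0.0:
        return [0.0] * len(samples)
    return [s / peak for s in samples]


def render(wavetable, frequency, sample_rate, num_samples):
    """Read the wavetable cyclically at the given frequency with linear interpolation."""
    n = len(wavetable)
    step = frequency * n / sample_rate  # table positions advanced per output sample
    phase = 0.0
    out = []
    for _ in range(num_samples):
        i = int(phase)
        frac = phase - i
        a = wavetable[i]
        b = wavetable[(i + 1) % n]  # wrap around at the end of the table
        out.append(a + (b - a) * frac)
        phase = (phase + step) % n
    return out


if __name__ == "__main__":
    # A hypothetical four-vertex model; rotating it changes the resulting waveform.
    model = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (-1.0, 0.0, 0.0), (0.0, -2.0, 0.0)]
    for angle in (0.0, math.pi / 6):
        table = vertices_to_wavetable(rotate_z(model, angle))
        print(angle, render(table, frequency=440.0, sample_rate=44100.0, num_samples=8))
```

Because the table is rebuilt from the vertices after every transform, any change to the geometry, such as a rotation or a deliberately broken mesh, changes the shape of one oscillator cycle and therefore the timbre, while the playback frequency stays under separate control.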
